All of the code used in this post is available in this colab notebook, which will run end to end (including installing TensorFlow 2.0).

This article assumes some knowledge of text generation, attention and the Transformer. In this tutorial we are going to focus on:

- Preprocessing the Cornell Movie-Dialogs Corpus using TensorFlow Datasets and creating an input pipeline using tf.data.
- Implementing MultiHeadAttention with Model subclassing.
- Implementing a Transformer with the Functional API.

Input: i am not crazy, my mother had me tested.

Sample conversations of a Transformer chatbot trained on the Movie-Dialogs Corpus.

Transformer

The Transformer, proposed in the paper Attention is All You Need, is a neural network architecture based solely on the self-attention mechanism and is highly parallelizable. A Transformer model handles variable-sized input using stacks of self-attention layers instead of RNNs or CNNs. This general architecture has a number of advantages:

- It makes no assumptions about the temporal/spatial relationships across the data. This is ideal for processing a set of objects.
- Layer outputs can be calculated in parallel, instead of in series like an RNN.
- Distant items can affect each other’s output without passing through many recurrent steps or convolution layers.

For a time series, the output for a time step is calculated from the entire history instead of only the inputs and current hidden state.
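The properties of self-attention described above (each output draws on the whole sequence, distant items interact directly, and positions do not depend on one another's results) can be illustrated with a small sketch. This is not the article's implementation: it is written in plain Python rather than TensorFlow so that it runs without dependencies, it assumes queries, keys and values are the raw inputs (a real Transformer layer applies learned projections first), and the names `softmax`, `self_attention` and `sequence` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(inputs):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries, keys and values are the inputs themselves.
    Returns (outputs, weights), where weights[i][j] is how much
    position i attends to position j.
    """
    depth = len(inputs[0])
    outputs, weights = [], []
    for query in inputs:
        # Score the query against every position, near or far, in one step.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(depth)
            for key in inputs
        ]
        row = softmax(scores)
        # Each output is a weighted average of all value vectors.
        outputs.append(
            [sum(w * value[d] for w, value in zip(row, inputs)) for d in range(depth)]
        )
        weights.append(row)
    return outputs, weights


# Hypothetical 2-dimensional embeddings for a four-token sequence.
# The first and last tokens are identical but three positions apart.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(sequence)
```

In this sketch the first token attends to the last token exactly as strongly as it attends to itself, because the score depends only on content and not on distance. No iteration of the loop over queries uses the result of another iteration, which is why frameworks can compute all positions at once as matrix multiplications. The same function accepts a sequence of any length.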

CONTEXTUAL CHATBOT EXAMPLES HOW TO

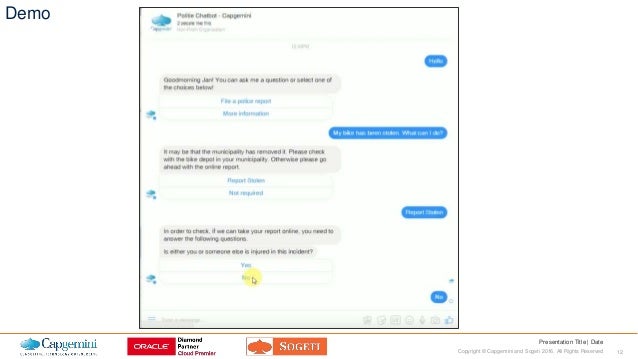

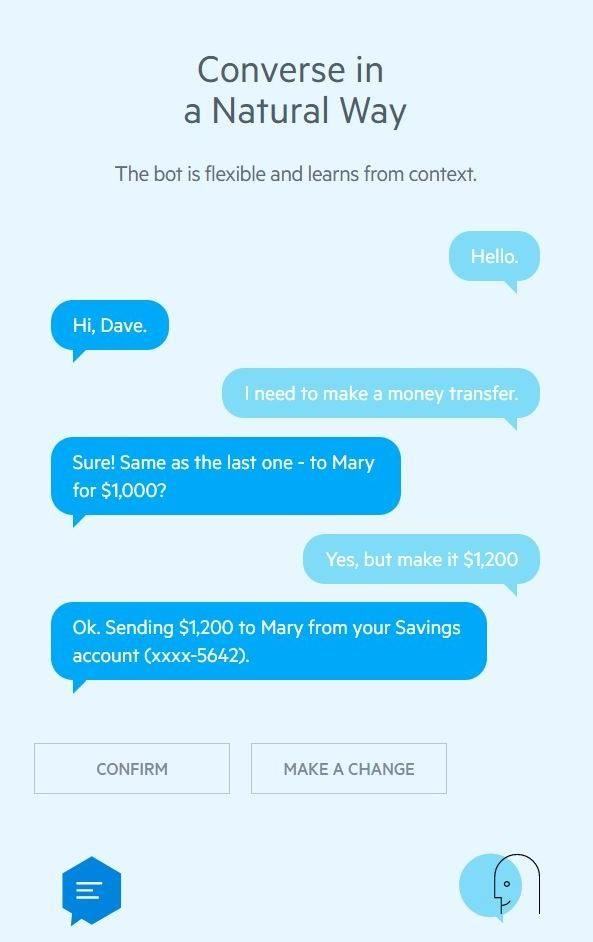

In this post, we will demonstrate how to build a Transformer chatbot.

With all the changes and improvements made in TensorFlow 2.0, we can build complicated models with ease. The use of artificial neural networks to create chatbots is increasingly popular. However, teaching a computer to have natural conversations is very difficult and often requires large and complicated language models.
